It's time for 2-D

Michael Herf

April 3, 2000

I'm excited about some of the new features of 3-D hardware, but it's not enough yet. All the polygons and fill rate are great, but there's not enough focus on quality and perception. There are certain effects that are relatively easy to do in software that haven't moved to hardware yet, and it's about time.

Idiot Savant

Last October, someone on a message board I subscribe to asked this stupid question:

"Why doesn't 3-D look as good as Photoshop?"

Laugh all you want at the naïveté, but it's a really brilliant question. Why indeed?

What has to happen

My thesis is that high-quality, flexible 2-D in hardware will be the glue that brings 3-D to the desktop.

This article is my list of the features that would give UI an opportunity to move forward, would make visualization of information a really exciting thing, and would enable a new class of interesting visual effects for games.

I have a few specific challenges for 3-D hardware makers. Each of these is a relatively simple thing to build, but together they would be a breakthrough for people writing advanced UI, and there's a huge opportunity here.

Mixed-mode rendering (software effects applied on top of hardware-rendered frames) is a disaster, and it will be for a while, because AGP reads are so slow. No matter how fast the processors get, these features cannot be bolted onto 3-D after the fact.

I'll concede that everyone's ignored Microsoft's talk about GDI+ and Talisman, so I really won't make a difference. But eventually someone will decide to apply all those cycles to making the world a better place.

Yeah, no one'll listen. I just think I'm right.

Introduction

(For those of you who haven't used Adobe After Effects, go beg, steal, buy, or otherwise acquire a copy, and play with it for four hours, then keep reading.)

The first point I'll make is that we need to abolish the pixel grid. We must make a system that dynamically scales everything, dynamically filters everything with subpixel positioning, and does this in a general way. (Think text — it's the hardest.)

One of my goals is to have truly integrated motion blur, transparency everywhere, high-res images with affine transformations, images with real-life gamut (really bright, really dark), and excellent viewplane filtering, eventually including programmable pixel shaders.

Filtering not dead yet

Contrary to what some in the industry believe, I think that the golden thread running through all "high quality" graphics systems isn't quantity of polygons. It's excellent viewplane and texture filtering. This is primary to me. Avoiding aliasing buys you anything you want. Trilinear sampling of textures makes text unreadable; point-sampled viewplane filtering, well, ugh.

Here's a small feature list for the first iteration:

- Ubiquitous high-quality affine transforms: hardware support for high-quality scaling and rotation for video and animation, including minification and magnification.

- Antialiased vectors (lines and fills): vector art in hardware

- Special effects: Gaussian blur, motion blur

- High-gamut framebuffers

- Layers in hardware

First, we need scaled, filtered stretch blts. To make this look good, a wide filter must be used (such as a windowed sinc or a Mitchell-Netravali bicubic). Transparency must be supported both per pixel and along edges.
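To make the "wide filter" idea concrete, here is a minimal software sketch of a Mitchell-Netravali resampler for a single row of pixels. It assumes the commonly cited B = C = 1/3 parameters and clamps at the edges; `resample_row` is a hypothetical helper name, not part of any hardware API, and a real stretch blt would apply the same pass along rows and then columns.

```python
import math

def mitchell(x, b=1/3, c=1/3):
    """Mitchell-Netravali cubic kernel (b = c = 1/3 is an assumed default)."""
    x = abs(x)
    if x < 1:
        return ((12 - 9*b - 6*c) * x**3 + (-18 + 12*b + 6*c) * x**2 + (6 - 2*b)) / 6
    if x < 2:
        return ((-b - 6*c) * x**3 + (6*b + 30*c) * x**2
                + (-12*b - 48*c) * x + (8*b + 24*c)) / 6
    return 0.0

def resample_row(src, dst_len):
    """Resample a 1-D row of samples to dst_len samples (hypothetical helper)."""
    scale = len(src) / dst_len
    support = max(scale, 1.0)              # widen the kernel when minifying
    out = []
    for i in range(dst_len):
        center = (i + 0.5) * scale - 0.5   # destination pixel centre in source space
        lo = math.floor(center - 2 * support)
        hi = math.ceil(center + 2 * support)
        acc = wsum = 0.0
        for j in range(lo, hi + 1):
            w = mitchell((j - center) / support)
            acc += w * src[min(max(j, 0), len(src) - 1)]   # clamp at the edges
            wsum += w
        out.append(acc / wsum)             # normalise so flat areas stay flat
    return out
```

Calling `resample_row(row, len(row) // 2)` gives the kind of 50% minification discussed here; the point of widening the kernel by `support` is that every source pixel contributes, which a fixed bilinear tap does not do.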

We know how to do nearly perfect filtering for affine transforms (for perspective we have to approximate). These techniques are memory- and time-efficient, and would be amazing for user interfaces.

If you want proof of why this is good, capture your screen. Go into Photoshop and scale the image to 50%. You can still read it, huh? You get the point — with good design, your desktop could effectively be 300% bigger.

The problems with current hardware are:

- Bad filtering — bilinear only (which is actually usually precomputed using just a box).

- No real hardware minification

- No good hardware magnification (only bilinear)

- Power-of-2 texture sizes only

- Slow uploads

Transparency is important. An alpha channel modulated by a constant will provide extraordinary opportunities for new UI innovation. OpenGL 1.2 supports modulated alpha blends, but again only with power-of-2 textures.
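As a small illustration of "an alpha channel modulated by a constant", the sketch below composites one pixel with per-pixel alpha scaled by a per-layer opacity. It assumes non-premultiplied colour in the 0.0 to 1.0 range; `blend_over` and `opacity` are illustrative names only.

```python
def blend_over(src_rgb, src_alpha, dst_rgb, opacity=1.0):
    """Composite one non-premultiplied source pixel over a destination pixel.

    `opacity` is the constant (per-layer) factor that modulates per-pixel alpha.
    """
    a = src_alpha * opacity
    return tuple(a * s + (1.0 - a) * d for s, d in zip(src_rgb, dst_rgb))

# Fade an opaque white window pixel in over a black desktop in four steps.
fade = [blend_over((1.0, 1.0, 1.0), 1.0, (0.0, 0.0, 0.0), opacity=t / 4)
        for t in range(5)]
```

The whole fade is driven by one number per layer, which is why this is cheap to expose in hardware and useful for UI transitions.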

Finally, gamma support is necessary to make scaling and subpixel rasterization work without visual strobing. This is relatively well supported on many accelerators today, but it needs to be more standardized.
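A quick way to see why gamma matters for scaling: averaging a black and a white pixel on the encoded values gives a result that displays too dark. The sketch below assumes a pure power-law display gamma of 2.2, which is a simplification (real displays and standards differ), and all function names are illustrative.

```python
GAMMA = 2.2  # assumed display gamma; real hardware varies

def to_linear(v):
    """Convert an 8-bit encoded value to linear light in 0.0..1.0."""
    return (v / 255.0) ** GAMMA

def to_encoded(lin):
    """Convert linear light back to an 8-bit encoded value."""
    return round(255.0 * lin ** (1.0 / GAMMA))

def average_naive(a, b):
    """Average the encoded values directly (what a gamma-unaware scaler does)."""
    return round((a + b) / 2)

def average_linear(a, b):
    """Average in linear light, then re-encode."""
    return to_encoded((to_linear(a) + to_linear(b)) / 2)

naive = average_naive(0, 255)      # mid-grey in encoded space
correct = average_linear(0, 255)   # noticeably brighter encoded value
```

Under this assumption the gamma-aware average comes out well above the naive one, so a thin line that moves across pixel boundaries keeps a steady apparent brightness instead of pulsing.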

Vectors (lines and fills)

Currently, antialiased vectors can be rasterized in software using a scanline renderer as is found in Flash, or using a more traditional antialiased concave polygon rasterizer. In either of these cases, the hardware is prevented from participating in setup, transformation, or rasterization.

Hardware can help when antialiasing isn't important. Tessellation from concave to convex can be done in software, and hardware can handle the rest. Newer hardware is supporting edge antialiasing at 2x2 or 3x3 samples; I don't think this is adequate for small text rendering. 4x4 or better is necessary for that.

That being said, analytic coverage, as is supported in hardware for simple polygons today, is actually a fine solution, but it needs a small kick to be useful. Notice first that hardware supports only convex polygons; generalizing this to concave polygons would be architecturally difficult.

However, coverage-based antialiasing in hardware needs a very basic change. The problem is that the Over operator that's used to blend external edges cannot work for interior edges — it leaves a seam. A general convex tessellation of a concave polygon may have several unavoidable seams using current hardware.

Troublesome concave polygon (tessellated)

The problem when it's filled

However, there's another solution, and I think it's a good one. If each polygon has a bit per edge that specifies whether or not to antialias that edge, standard fill conventions will fix the problems. Analytic methods can be used on exterior edges, and pixel rules for internal edges will work. This will allow general hardware filling of antialiased vectors and fills without a fill rate penalty.

A bit per edge can fix it — render internal edges without antialiasing, external ones with.
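The seam is easy to reproduce with arithmetic on a single pixel that straddles a shared interior edge. This is a simplified sketch: it treats coverage as the blend weight for Over, uses a single grey channel, and the helper names are made up for illustration.

```python
def over(src, coverage, dst):
    """Blend src onto dst, weighted by the fragment's pixel coverage."""
    return coverage * src + (1.0 - coverage) * dst

def shade(fragments, background=0.0):
    """Apply (colour, coverage) fragments to one pixel, in order."""
    value = background
    for colour, coverage in fragments:
        value = over(colour, coverage, value)
    return value

WHITE = 1.0

# Both sides of the interior edge antialiased: each triangle reports
# 50% coverage of the shared pixel, and the background leaks through.
seam = shade([(WHITE, 0.5), (WHITE, 0.5)])

# Interior edge flagged "no antialiasing": the fill rule hands the whole
# pixel to exactly one triangle, which writes it at full coverage.
fixed = shade([(WHITE, 1.0)])
```

Here `seam` ends up at 0.75 rather than 1.0, so a quarter of the black background shows through a polygon that should be solid white, while `fixed` is fully white.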

Image-based filters

After Effects does 16x oversampling in time to produce its motion blur effects. We have to recognize that eventually, fill rate (with an accumulation buffer) will win. But for the interim, I really think that layer-based linear motion blur is the best solution. Some mid- to high-end systems, such as LightWave and ElectricImage, use only linear motion blur, and the effects are great.

Linear motion blur is cheap — the only hard part is supporting hardware layers.
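For a layer moving in a straight line, the accumulation approach reduces to averaging copies of the layer displaced along the motion vector. The sketch below does this for one row of pixels with 16 time samples; it is a brute-force software illustration with hypothetical names, not a description of how After Effects or any hardware implements it.

```python
import math

def sample(row, pos):
    """Linearly interpolate row at fractional position pos; zero outside."""
    i = math.floor(pos)
    f = pos - i
    a = row[i] if 0 <= i < len(row) else 0.0
    b = row[i + 1] if 0 <= i + 1 < len(row) else 0.0
    return a * (1.0 - f) + b * f

def linear_motion_blur(row, shift, samples=16):
    """Average `samples` copies of row displaced evenly from 0 to `shift` pixels."""
    out = [0.0] * len(row)
    for s in range(samples):
        offset = shift * s / (samples - 1) if samples > 1 else 0.0
        for x in range(len(row)):
            out[x] += sample(row, x - offset)
    return [v / samples for v in out]

# A single bright pixel moving 4 pixels to the right during the frame.
layer = [0.0] * 12
layer[2] = 1.0
streak = linear_motion_blur(layer, shift=4.0)
```

The bright pixel is smeared into a streak between its start and end positions while the total energy stays the same. Because the motion is linear, the same result could be produced by a single directional filter over the layer, which is what makes it cheap.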

If you haven't done some serious offline animation with motion blur, do some tests. The difference is stunning, unbelievable — I think it's more important than texture filtering in the overall scheme of things.

Gaussians

After logging the hours in Photoshop, I think Gaussian blurs are indispensable. My guess is that most 3-D readers will find this section foreign, but I think it might be the most important one here.

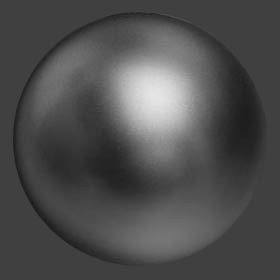

In the 3-D space, several blurs on a cubemap can be used to simulate an isotropic BRDF in real time. You can make high-quality noises using similar techniques.

Output from software-based post-processing of a reflection map

In UI, imagine being able to blur whole regions to draw attention away from them, or to make high-quality dynamic shadows, and you start to see the utility of hardware Gaussian blurs.

Windows UI with drop shadows and fading.

How easy is this to build? Approximations to the Gaussian can be extraordinarily fast in software, and hardware could be much faster. The data access is very compatible with existing memory systems, and a feature like this for use on a layer or in texture memory could be the largest visual difference we've seen.
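One well-known fast approximation (not necessarily the one I have in mind above) is to run a box blur several times: each pass is a running sum whose cost per pixel does not depend on the radius, and three passes already look close to a Gaussian. A minimal 1-D sketch, with illustrative names and clamped edges:

```python
def box_blur(row, radius):
    """Running-sum box blur; work per pixel is independent of radius."""
    n = len(row)
    width = 2 * radius + 1
    # Sum of the window centred on pixel 0, with edge pixels clamped.
    acc = sum(row[min(max(i, 0), n - 1)] for i in range(-radius, radius + 1))
    out = []
    for x in range(n):
        out.append(acc / width)
        # Slide the window one pixel right: add the entering sample,
        # drop the leaving one.
        acc += row[min(x + radius + 1, n - 1)] - row[max(x - radius, 0)]
    return out

def approx_gaussian(row, radius, passes=3):
    """Approximate a Gaussian blur by repeated box blurs."""
    for _ in range(passes):
        row = box_blur(row, radius)
    return row

impulse = [0.0] * 21
impulse[10] = 1.0
bell = approx_gaussian(impulse, radius=2)
```

The access pattern is a straight sequential walk over memory with one add and one subtract per pixel, which is the sense in which it suits existing memory systems. A 2-D blur applies the same pass to rows and then to columns.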

Aside from its tendency to over-blur, a Gaussian is a relatively high-quality filter, and it would make a big difference to have this feature on-board.

Conclusions

I think that the missing 2-D features prevent integration of newer 3-D hardware with the 2-D desktop. In my opinion, these architectural choices were much of the reason for the downfall of Microsoft's Chromeffects project: quality and speed were well below what people were used to. On the general integration side, breaking the power-of-2 texture limitation is crucial. On the quality side, high-quality filters and effects are basic and important.

I know that several projects, such as Apple's Mac OS X and many of the next-generation Linux UIs, are approaching this problem strictly from the software side. As these implementations grow, there will be a larger and larger wall preventing true integration with 3-D geometry and more advanced acceleration features. I don't want this to happen.

I think that the combination of these ideas and abilities would make a capable and powerful next-generation desktop, and would also enhance the 3-D experience in an extraordinary way.